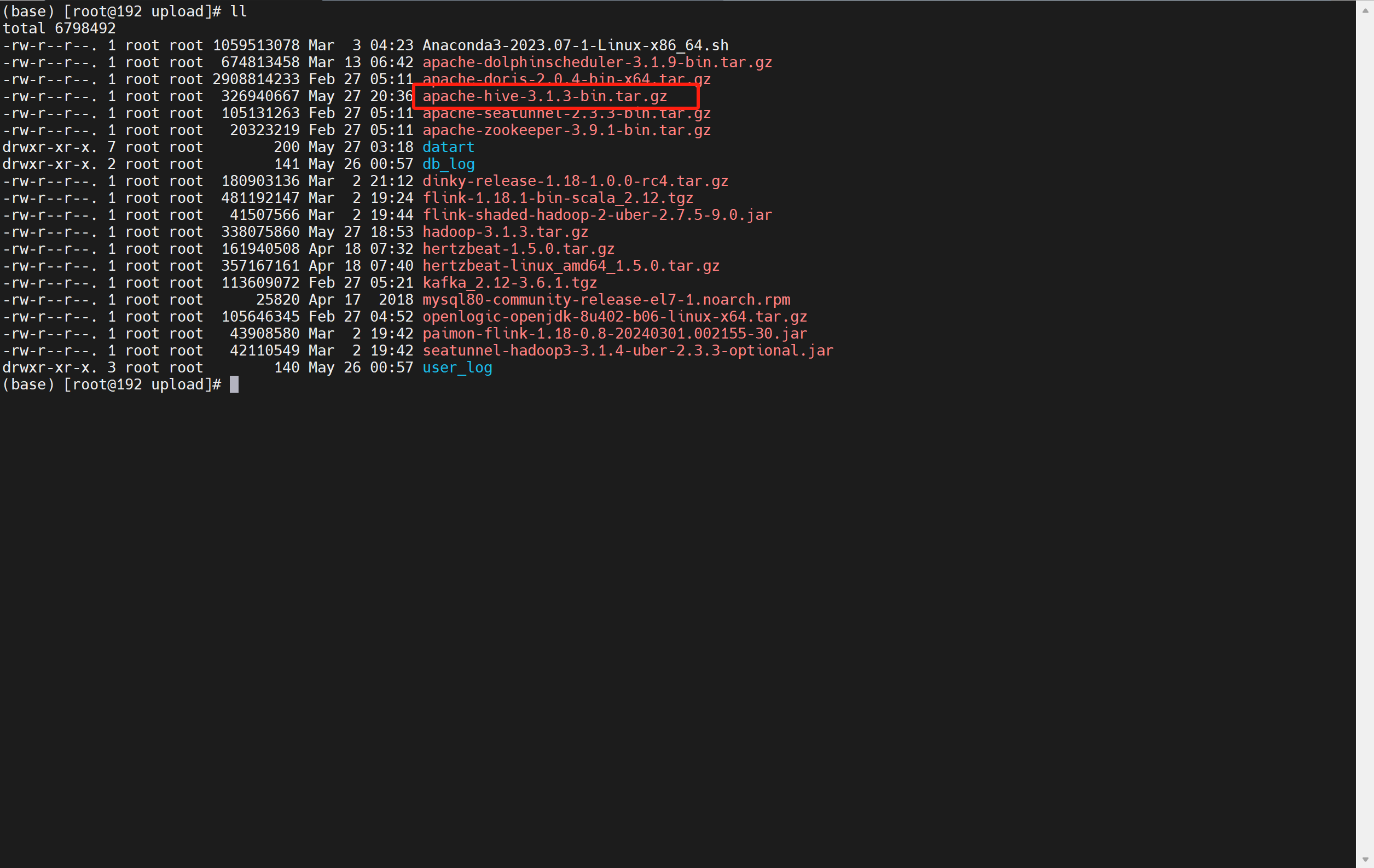

1. Upload apache-hive-3.1.3-bin.tar.gz to /opt/upload

2. Extract apache-hive-3.1.3-bin.tar.gz into /opt/software

tar -zxvf /opt/upload/apache-hive-3.1.3-bin.tar.gz -C /opt/software

3. Create the auxlib directory (in preparation for integrating Paimon with Hive later)

mkdir -p /opt/software/apache-hive-3.1.3-bin/auxlib

4. Upload paimon-hive-connector-3.1-0.7.0-incubating.jar to /opt/software/apache-hive-3.1.3-bin/auxlib
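Steps 2 to 4 can be scripted roughly as below. This is a minimal sketch rather than part of the original procedure. The function name `install_hive_dist` is hypothetical. The sketch assumes the tarball unpacks to a top-level `apache-hive-3.1.3-bin` directory, as the paths in step 3 imply. It also assumes the Paimon jar was uploaded to the same upload directory as the tarball, whereas the guide uploads it directly to auxlib.

```bash
#!/usr/bin/env bash
# Hypothetical helper sketching steps 2-4; not part of Hive or Paimon.
HIVE_TARBALL="apache-hive-3.1.3-bin.tar.gz"
PAIMON_JAR="paimon-hive-connector-3.1-0.7.0-incubating.jar"

install_hive_dist() {
  local upload_dir=$1 software_dir=$2
  local hive_dir="$software_dir/apache-hive-3.1.3-bin"

  mkdir -p "$software_dir" || return 1
  # -C extracts into software_dir; without it tar treats that path as a member name
  tar -zxf "$upload_dir/$HIVE_TARBALL" -C "$software_dir" || return 1
  # auxlib is the directory HIVE_AUX_JARS_PATH will point to
  mkdir -p "$hive_dir/auxlib" || return 1
  # assumption: the connector jar sits next to the tarball in upload_dir
  cp "$upload_dir/$PAIMON_JAR" "$hive_dir/auxlib/"
}

# Usage, mirroring the paths in this guide:
#   install_hive_dist /opt/upload /opt/software
```

Because the function takes both directories as arguments, it can be tried against a scratch directory before it touches /opt.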

5. Edit hive-env.sh

vim /opt/software/apache-hive-3.1.3-bin/conf/hive-env.sh

Add the following:

export HADOOP_HOME=/opt/software/hadoop-3.1.3

export HIVE_CONF_DIR=/opt/software/apache-hive-3.1.3-bin/conf

export HIVE_AUX_JARS_PATH=/opt/software/apache-hive-3.1.3-bin/auxlib

6. Edit hive-site.xml

vim /opt/software/apache-hive-3.1.3-bin/conf/hive-site.xml

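Add the following properties inside the `<configuration>` root element. If the file does not exist yet, create it with a `<configuration>` root.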

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.244.129:3306/hivemetastore?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hivemetastore</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hivemetastore</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://192.168.244.129:9083</value>

</property>

<property>

<name>hive.server2.webui.host</name>

<value>192.168.244.129</value>

</property>

<property>

<name>hive.server2.webui.port</name>

<value>10002</value>

</property>

<!-- Hive 3.x turns on ACID by default, and Spark does not support reading ACID Hive tables, so ACID needs to be turned off -->

<property>

<name>hive.strict.managed.tables</name>

<value>false</value>

</property>

<property>

<name>hive.create.as.insert.only</name>

<value>false</value>

</property>

<property>

<name>metastore.create.as.acid</name>

<value>false</value>

</property>

<!-- Disable metastore schema version verification -->

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<!-- Host that HiveServer2 binds to -->

<property>

<name>hive.server2.thrift.bind.host</name>

<value>192.168.244.129</value>

</property>

<!-- Port that HiveServer2 listens on -->

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

7. Configure environment variables

vim /etc/profile

#HIVE_HOME

export HIVE_HOME=/opt/software/apache-hive-3.1.3-bin

export PATH=$PATH:$HIVE_HOME/bin

Reload the configuration:

source /etc/profile
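If step 7 is run more than once, appending the exports by hand duplicates them. The sketch below appends a line only when that exact line is not already present. The helper name `append_once` is hypothetical. The same helper would work for the hive-env.sh lines in step 5.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is absent,
# so re-running the setup does not duplicate exports.
append_once() {
  local file=$1 line=$2
  touch "$file" || return 1
  # -q quiet, -x match the whole line, -F treat the line as a fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (as root), mirroring step 7; single quotes keep $PATH unexpanded:
#   append_once /etc/profile 'export HIVE_HOME=/opt/software/apache-hive-3.1.3-bin'
#   append_once /etc/profile 'export PATH=$PATH:$HIVE_HOME/bin'
#   source /etc/profile
```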

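8. Initialize the metastore database (schematool needs the MySQL JDBC driver jar, Connector/J, in $HIVE_HOME/lib)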

schematool -dbType mysql -initSchema
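A common reason `schematool -initSchema` fails is a missing MySQL driver. The following is a minimal pre-check sketch. The function name `has_mysql_driver` is hypothetical. The jar name varies by Connector/J version: it is `mysql-connector-java-*.jar` for 5.x and older 8.0 releases, and `mysql-connector-j-*.jar` for newer 8.x releases. The class `com.mysql.jdbc.Driver` in the hive-site.xml above is the 5.x name; 8.x uses `com.mysql.cj.jdbc.Driver`.

```bash
#!/usr/bin/env bash
# Hypothetical pre-check: succeed if a MySQL Connector/J jar is in the given lib dir.
has_mysql_driver() {
  local lib_dir=$1 jar
  # one glob covers both mysql-connector-java-*.jar and mysql-connector-j-*.jar
  for jar in "$lib_dir"/mysql-connector-*.jar; do
    # with no match the glob stays literal, so check that the file really exists
    [ -e "$jar" ] && return 0
  done
  return 1
}

# Usage:
#   has_mysql_driver "$HIVE_HOME/lib" && schematool -dbType mysql -initSchema
#   schematool -dbType mysql -info   # afterwards, shows the schema version
```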

9. Start the metastore service

hive --service metastore -p 9083 &

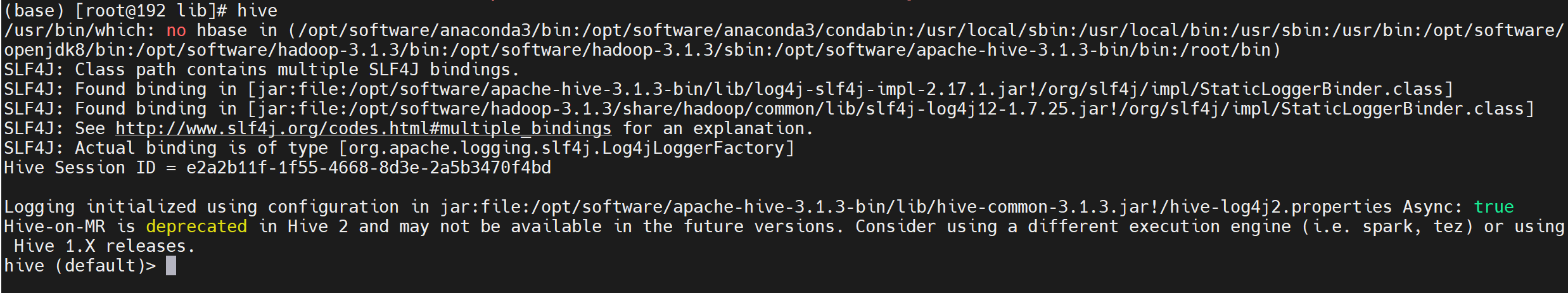
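Step 9 starts the metastore in the background with `&`, so the command returns before the Thrift service is actually listening. The sketch below waits until port 9083 accepts TCP connections before anything connects to it. It assumes bash, because it uses bash's `/dev/tcp` redirection. It also assumes the host and port from `hive.metastore.uris`. The helper name `wait_for_port` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll host:port until it accepts a TCP connection,
# giving up after timeout seconds (default 60). Needs bash for /dev/tcp.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-60} waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # opening /dev/tcp/host/port in a subshell attempts a TCP connect
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}

# Usage, matching step 9:
#   hive --service metastore -p 9083 &
#   wait_for_port 192.168.244.129 9083 120 && echo "metastore is up"
```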

10. Run Hive
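The hive-site.xml from step 6 also sets HiveServer2's bind host and Thrift port (10000). The sketch below reads those values back to build a beeline JDBC URL. The helpers `get_hive_prop` and `hs2_jdbc_url` are hypothetical. They assume the one-tag-per-line layout used in step 6 and are not a general XML parser. HiveServer2 also has to be started separately, for example with `hive --service hiveserver2`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the <value> of a property from a hive-site.xml
# laid out with <name> and <value> on their own lines.
get_hive_prop() {
  local file=$1 name=$2
  awk -v tag="<name>$name</name>" '
    index($0, tag) { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, ""); print; exit
    }
  ' "$file"
}

# Hypothetical helper: build the beeline JDBC URL from the HiveServer2 settings.
hs2_jdbc_url() {
  local file=$1
  printf 'jdbc:hive2://%s:%s\n' \
    "$(get_hive_prop "$file" hive.server2.thrift.bind.host)" \
    "$(get_hive_prop "$file" hive.server2.thrift.port)"
}

# Usage:
#   beeline -u "$(hs2_jdbc_url "$HIVE_HOME/conf/hive-site.xml")" -n "$USER"
```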