1 Install Flink

See section 2.12.

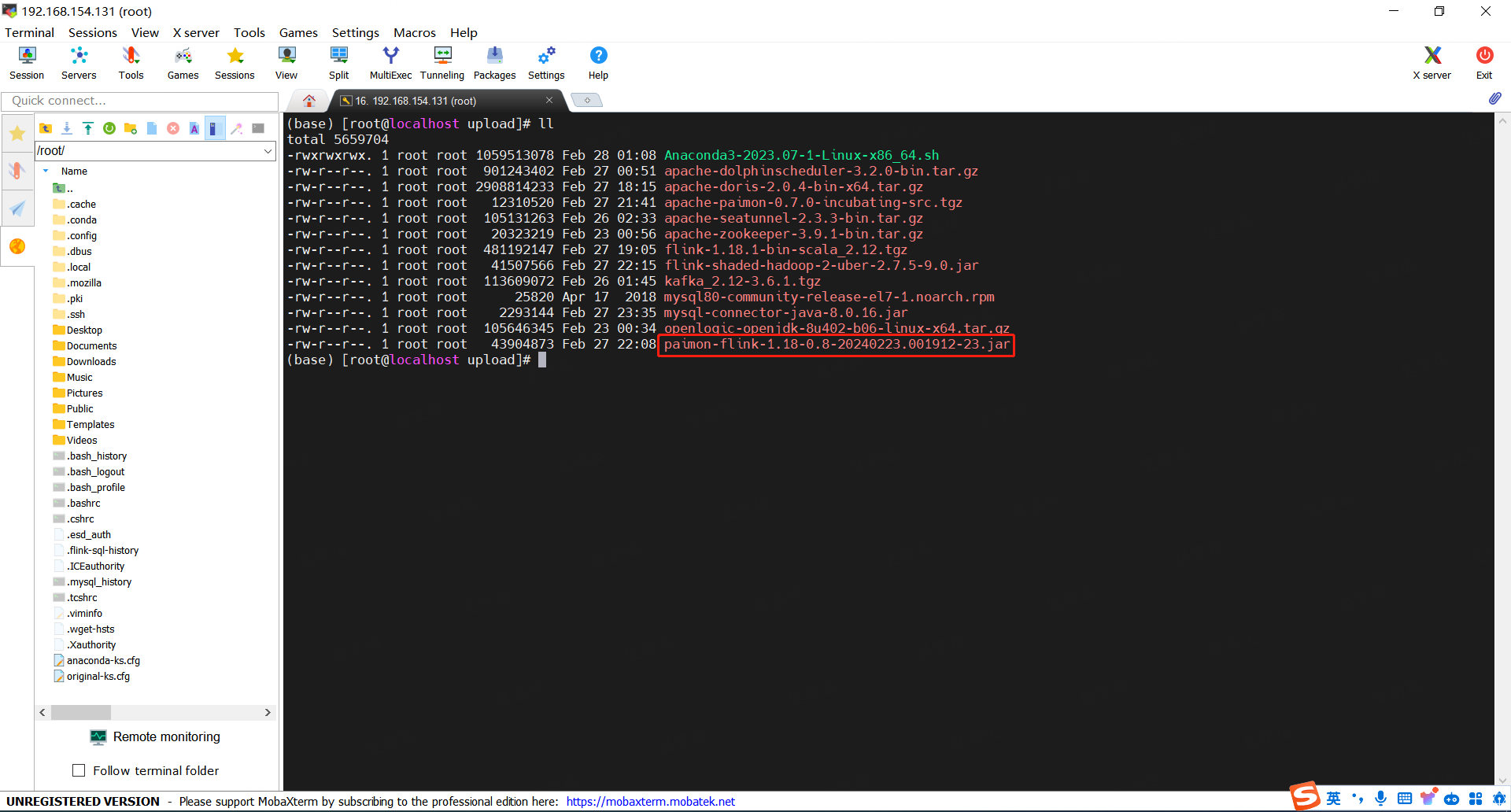

2 Upload the Paimon Bundled Jar to /opt/upload

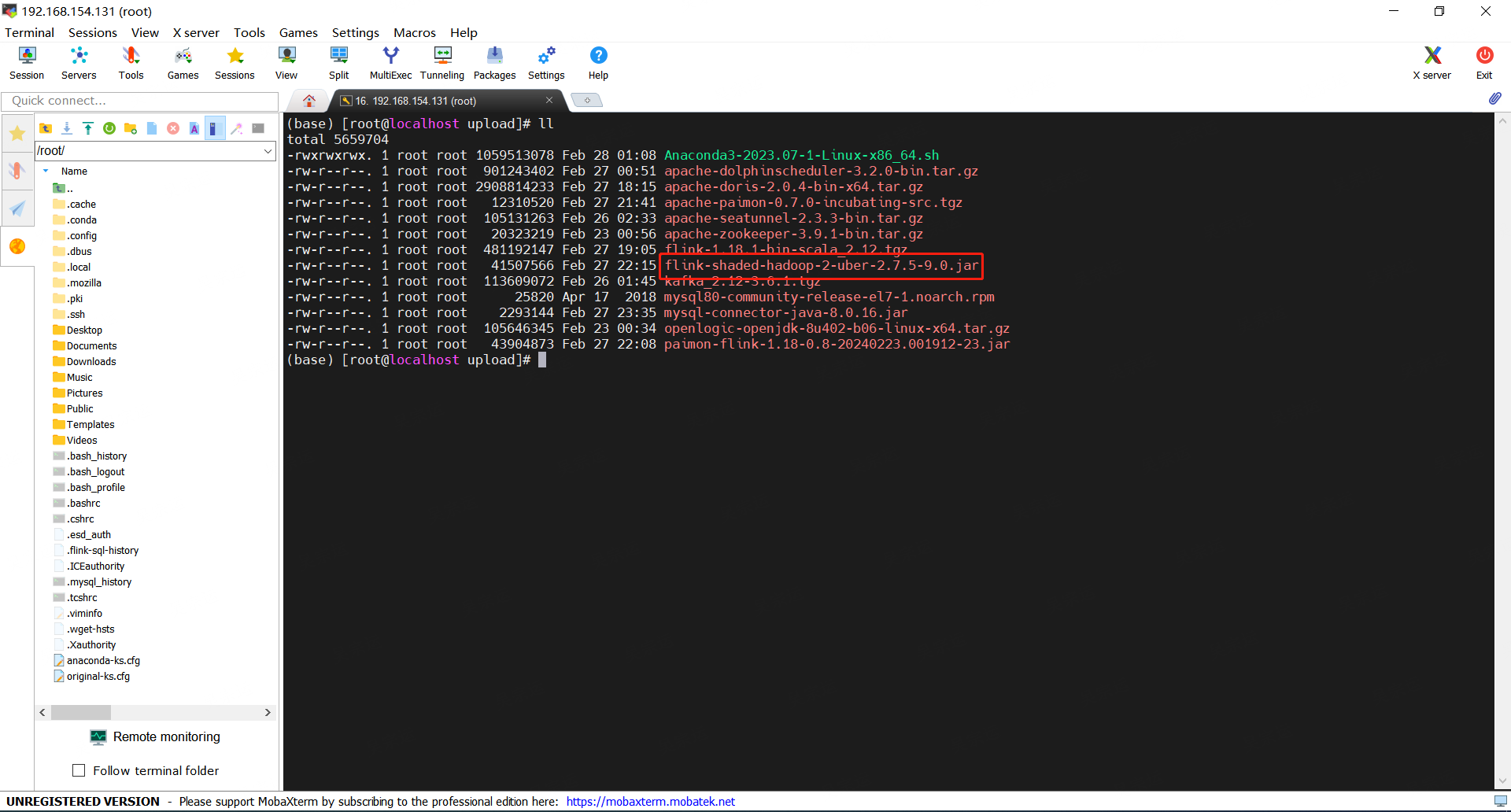

3 Upload the Hadoop Bundled Jar to /opt/upload

4 Copy the Paimon Bundled Jar and the Hadoop Bundled Jar to /opt/software/flink-1.18.1/lib/

cp /opt/upload/paimon-flink-1.18-0.8-20240301.002155-30.jar /opt/software/flink-1.18.1/lib/

cp /opt/upload/flink-shaded-hadoop-2-uber-2.7.5-9.0.jar /opt/software/flink-1.18.1/lib/
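Before starting the cluster it can help to confirm that both jars actually landed in the lib directory. The following is a minimal sketch; `check_jars` is a hypothetical helper name, not part of Flink or Paimon.

```shell
# Hypothetical helper: report which of the expected jars are missing
# from a Flink lib directory.
# Usage: check_jars <lib_dir> <jar_name>...
# Returns 0 when every jar is present, 1 otherwise.
check_jars() {
  lib_dir=$1
  shift
  missing=0
  for jar in "$@"; do
    if [ ! -f "$lib_dir/$jar" ]; then
      echo "missing: $lib_dir/$jar"
      missing=1
    fi
  done
  return "$missing"
}
```

With the paths used in this guide, the call would be `check_jars /opt/software/flink-1.18.1/lib paimon-flink-1.18-0.8-20240301.002155-30.jar flink-shaded-hadoop-2-uber-2.7.5-9.0.jar`.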

5 Start Flink

cd /opt/software/flink-1.18.1/bin

./start-cluster.sh

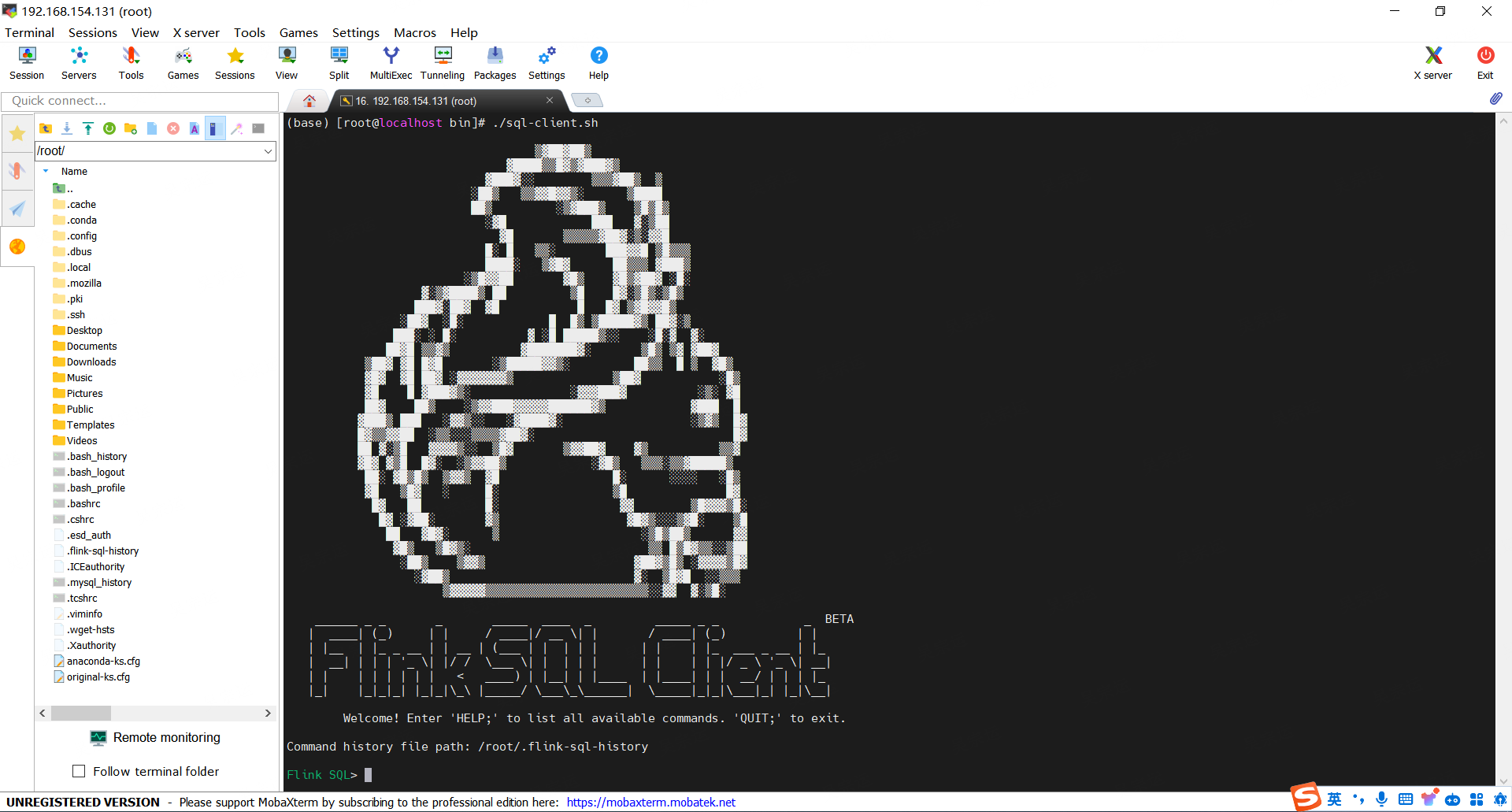
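One way to confirm that the standalone cluster came up is to look for its two JVM processes in the output of `jps`. The sketch below assumes a default standalone session cluster, whose daemons appear as `StandaloneSessionClusterEntrypoint` (JobManager) and `TaskManagerRunner` (TaskManager); `flink_daemons_up` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `jps` output on stdin and succeeds only if
# both standalone Flink daemons are listed.
flink_daemons_up() {
  jps_output=$(cat)
  printf '%s\n' "$jps_output" | grep -q 'StandaloneSessionClusterEntrypoint' &&
    printf '%s\n' "$jps_output" | grep -q 'TaskManagerRunner'
}
```

A typical call would be `jps | flink_daemons_up && echo "Flink is running"`.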

6 Start sql-client.sh

cd /opt/software/flink-1.18.1/bin

./sql-client.sh

7 Create a Catalog and a Table

CREATE CATALOG my_catalog WITH (

'type'='paimon',

'warehouse'='file:/tmp/paimon'

);

USE CATALOG my_catalog;

CREATE TABLE word_count (

word STRING PRIMARY KEY NOT ENFORCED,

cnt BIGINT

);

8 Write data

CREATE TEMPORARY TABLE word_table (

word STRING

) WITH (

'connector' = 'datagen',

'fields.word.length' = '1'

);

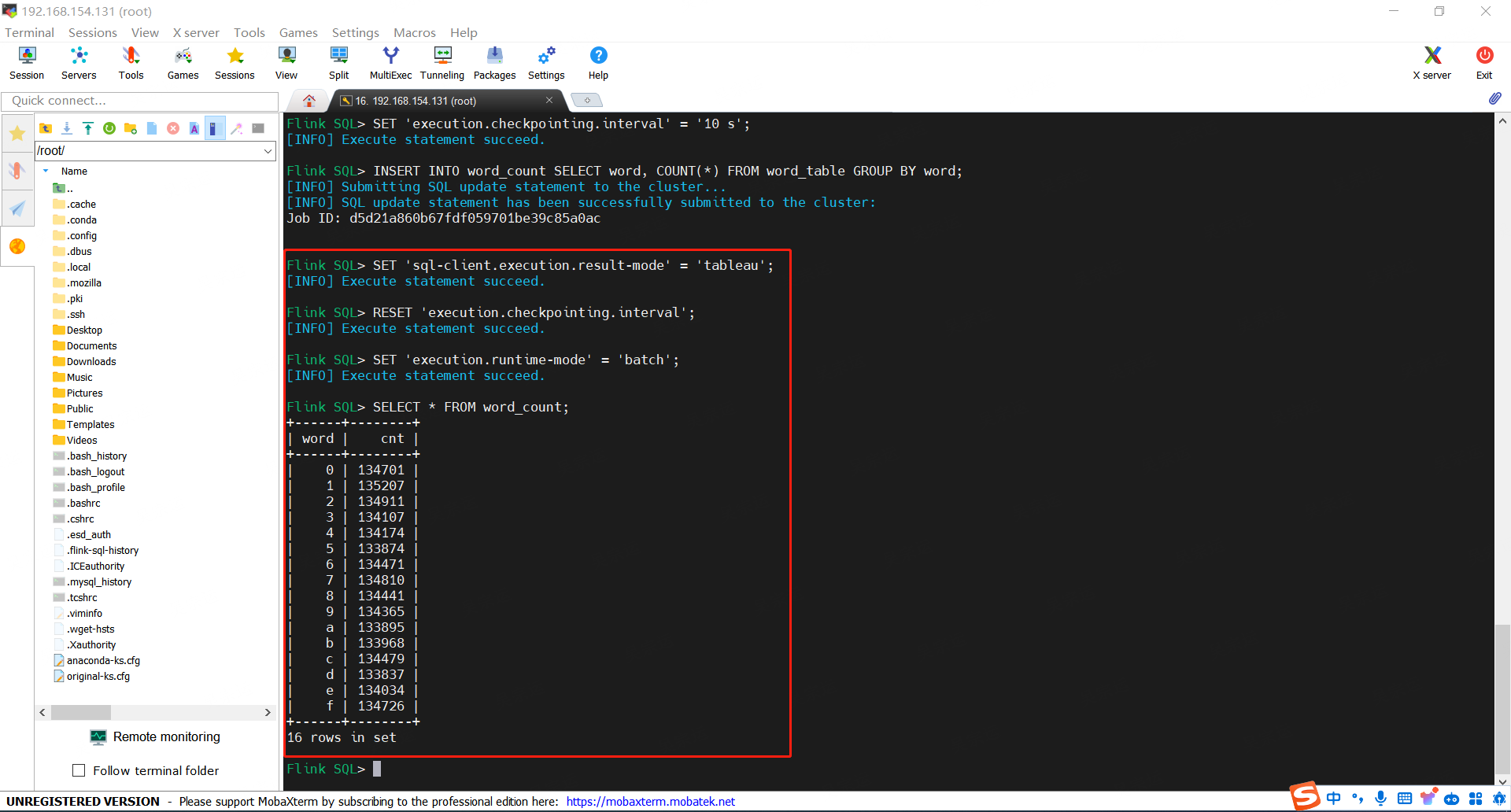

SET 'execution.checkpointing.interval' = '10 s';

INSERT INTO word_count SELECT word, COUNT(*) FROM word_table GROUP BY word;

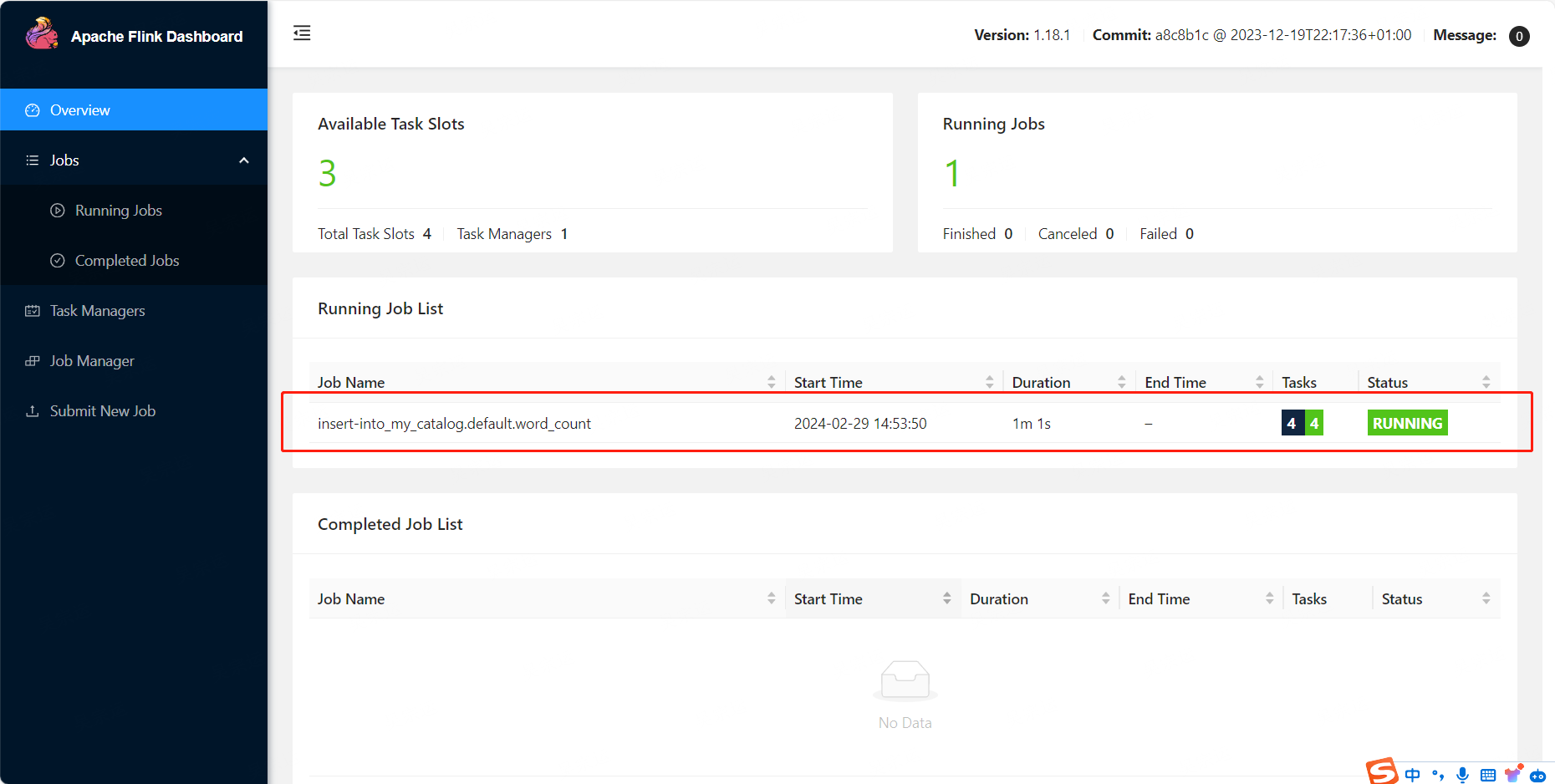

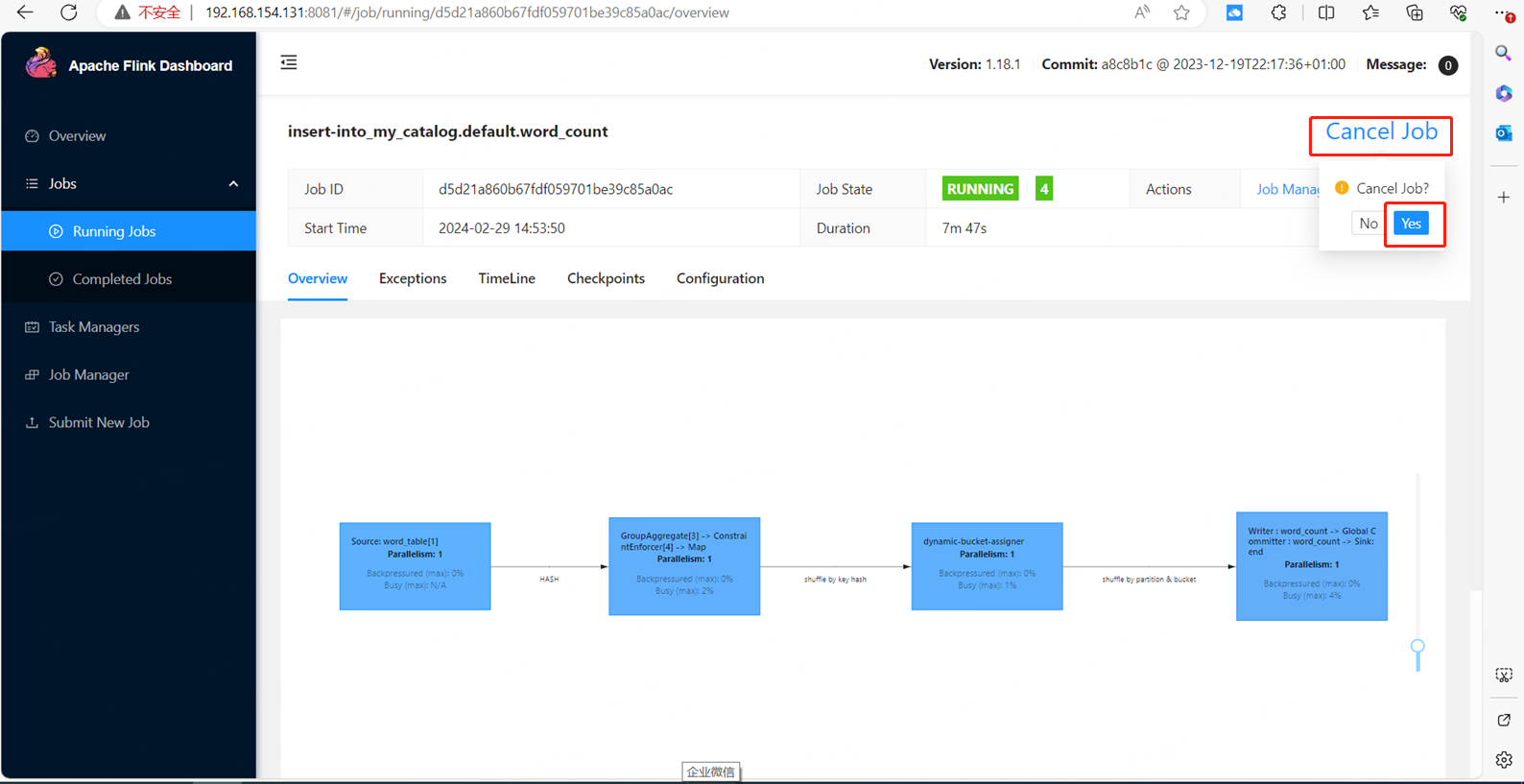
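Once the INSERT job is running, its progress can also be observed on disk. The sketch below assumes the usual layout of a filesystem warehouse, in which the table lives under `<warehouse>/default.db/word_count` and each commit writes a file named `snapshot-<N>` into its `snapshot` subdirectory; `count_snapshots` is a hypothetical helper name.

```shell
# Hypothetical helper: count snapshot files (snapshot-<N>) under a Paimon
# table directory. Prints 0 if no snapshot has been committed yet.
count_snapshots() {
  table_dir=$1
  count=0
  for f in "$table_dir"/snapshot/snapshot-*; do
    # When nothing matches, the glob stays literal and the -f test fails.
    if [ -f "$f" ]; then
      count=$((count + 1))
    fi
  done
  echo "$count"
}
```

With the warehouse from step 7, the call would be `count_snapshots /tmp/paimon/default.db/word_count`. Because commits are tied to checkpoints, the number should grow as checkpoints complete at the 10 s interval set above.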

9 View the job in the Flink web UI

http://192.168.154.131:8081
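The same port also serves Flink's REST API, so the job state can be checked from a terminal. The sketch below assumes that `GET /jobs/overview` returns JSON in which every job entry carries a `"state"` field; `count_running_jobs` is a hypothetical helper that counts the entries in state `RUNNING` with a plain text match rather than a JSON parser.

```shell
# Hypothetical helper: reads the JSON body of /jobs/overview on stdin and
# prints how many jobs report the state RUNNING.
count_running_jobs() {
  # grep exits non-zero when nothing matches; `|| true` keeps the pipeline
  # usable under `set -e` / `pipefail`.
  { grep -o '"state" *: *"RUNNING"' || true; } | wc -l | tr -d ' '
}
```

A typical call would be `curl -s http://192.168.154.131:8081/jobs/overview | count_running_jobs`.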

10 Batch query

SET 'sql-client.execution.result-mode' = 'tableau';

RESET 'execution.checkpointing.interval';

SET 'execution.runtime-mode' = 'batch';

SELECT * FROM word_count;

11 Streaming query

SET 'execution.runtime-mode' = 'streaming';

SELECT `interval`, COUNT(*) AS interval_cnt FROM

(SELECT cnt / 10000 AS `interval` FROM word_count) GROUP BY `interval`;

12 Exit the client

Ctrl + c

Exit sql-client

quit;

13 Stop the job

http://192.168.154.131:8081

Click Running Jobs, open the job's details, click Cancel Job, then click Yes.

14 Stop the Flink cluster
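The job can also be cancelled from the command line with `./flink list -r` and `./flink cancel <jobId>`, both run from /opt/software/flink-1.18.1/bin. The sketch below assumes that job IDs appear in the `flink list` output as 32-character lowercase hexadecimal strings; `extract_job_ids` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `flink list` output on stdin and prints every
# 32-character hexadecimal job ID it finds, one per line.
extract_job_ids() {
  # `|| true` avoids a non-zero exit status when no job is listed.
  { grep -oE '[0-9a-f]{32}' || true; }
}
```

A typical call would be `./flink list -r | extract_job_ids`, followed by `./flink cancel` with each printed ID.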

See section 2.12.